Hi @Marc,

You mean like this?

I’ve done something similar in this thread, using Bokeh’s lifecycle hooks:

[BUG] background thread stops when session is destroyed, leading to weird errors - Panel - HoloViz Discourse

Bear with me, as I haven’t looked at the code in months, but at a high level it should be something like this:

There are two scripts:

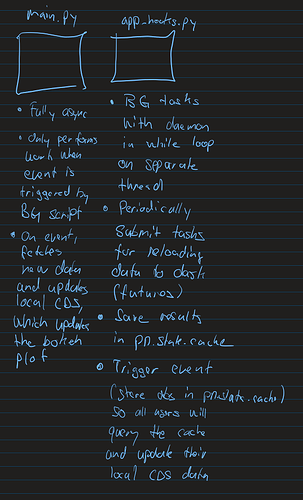

`main.py` (the Panel app) and `app_hooks.py` (the background script).

The background script runs a while loop on a separate thread that periodically submits data-loading tasks to Dask. The results are saved in `pn.state.cache`, and the Panel app is notified via an event trigger that is also stored in `pn.state.cache`. When triggered, the Panel app updates its internal ColumnDataSource (CDS) with the new data, which makes all plots refresh simultaneously, as you can see in the video.
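Roughly, the data flow looks like the sketch below. It is not my actual code, and it swaps in stdlib stand-ins so it runs on its own: a plain dict plays the role of `pn.state.cache`, a `ThreadPoolExecutor` plays the role of the Dask client (both hand back futures from `submit`), a `threading.Event` is the trigger, and another dict stands in for the CDS `.data`. `load_new_data`, `background_loop` and `refresh_if_new` are hypothetical names.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Stand-in for pn.state.cache: a plain dict shared by everything in one process.
cache = {"new_data_event": threading.Event()}


def load_new_data(step):
    """Hypothetical loader; in the real app this would query your data source."""
    return {"x": [step], "y": [step * step]}


def background_loop(executor, stop, interval=0.1, max_steps=None):
    """Periodically submit a load task, store the result and signal the app."""
    step = 0
    while not stop.is_set():
        # ThreadPoolExecutor stands in for a Dask client; both return futures.
        future = executor.submit(load_new_data, step)
        cache["data"] = future.result()
        cache["new_data_event"].set()  # notify the Panel app
        step += 1
        if max_steps is not None and step >= max_steps:
            break
        stop.wait(interval)  # sleep, but wake up early if asked to stop


def refresh_if_new(source_data, timeout=1.0):
    """App side: wait for the trigger, then swap in the new data.

    source_data stands in for a ColumnDataSource's .data dict; since all
    plots share the one source, updating it refreshes them together.
    """
    event = cache["new_data_event"]
    if not event.wait(timeout):
        return False  # nothing new arrived in time
    event.clear()
    source_data.update(cache["data"])
    return True
```

In a real Bokeh/Panel app the CDS update has to run on the document's own thread (for example via `doc.add_next_tick_callback`), which this sketch leaves out.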

I used lifecycle hooks because Panel would unload everything from memory when a session was destroyed. Not sure if this has been fixed in the meantime.

The neat part of using Dask for this (apart from offloading the computations) is observability.

Ideally, I’d like to use Dask or Redis as a distributed memory cache so that more than one Panel process can run.

Note: IIRC, Bokeh discontinued the lifecycle hooks in version 3, so you may need to use an older version.