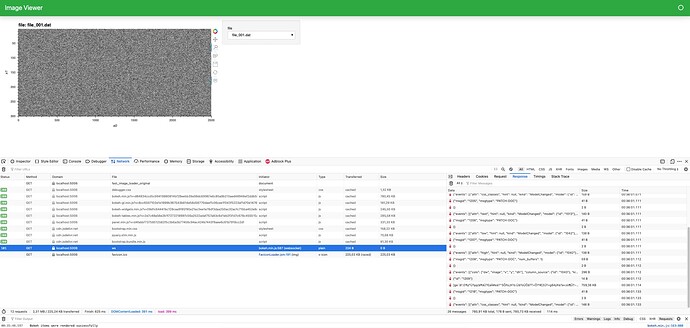

I’m trying to view data as images over a low-speed network connection. The data are 2D NumPy arrays of up to 1 million float elements each. On startup, I load the image plots into a dictionary (and cache it) and feed the dictionary to a HoloMap. This appears to be the fastest option, but scrolling through the images is still too laggy over the slow connection.
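To put the lag in numbers, it can help to estimate the raw size of each frame and how long it would take to cross the link. This is a minimal sketch, assuming frames travel uncompressed at their raw dtype size over a hypothetical 10 Mbit/s link; real Bokeh serialization and protocol overhead will differ, so treat it as an order-of-magnitude estimate only.

```python
import numpy as np


def frame_nbytes(shape, dtype):
    """Raw size in bytes of one uncompressed frame with this shape and dtype."""
    return int(np.prod(shape)) * np.dtype(dtype).itemsize


def transfer_seconds(shape, dtype, link_mbit_per_s):
    """Rough lower bound on the time to send one frame over a link of the
    given speed, ignoring protocol overhead, encoding and compression."""
    bits = frame_nbytes(shape, dtype) * 8
    return bits / (link_mbit_per_s * 1e6)


# A 1000 x 1000 frame (the "up to 1 million elements" case) over an
# assumed 10 Mbit/s link, for a few candidate dtypes.
for dtype in (np.float64, np.float32, np.uint8):
    print(np.dtype(dtype).name,
          frame_nbytes((1000, 1000), dtype),
          round(transfer_seconds((1000, 1000), dtype, 10), 2))
```

`np.random.rand` produces float64, so under these assumptions a full-size frame is about 8 MB and roughly 6.4 s on such a link; converting with `astype(np.float32)` halves that, and 8-bit codes cut it by a factor of eight.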

The data arrive in batches, and there is a time constraint. The up-front loading cost is tolerable, but it would be preferable if the images could begin displaying immediately while the rest continue to load.
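One general pattern for "display now, keep loading" is to submit every load to a background pool up front and block only on the frame that is actually requested. This is a minimal sketch using the standard library; `BackgroundLoader` and `slow_load` are hypothetical names, and `slow_load` merely stands in for the real `read_data()`.

```python
import time
from concurrent.futures import ThreadPoolExecutor


class BackgroundLoader:
    """Start loading every key in the background and hand back results on demand.

    get(key) blocks only until *that* key is ready, so the first frame can be
    shown while the rest of the batch is still loading.
    """

    def __init__(self, keys, load_fn, max_workers=4):
        self._executor = ThreadPoolExecutor(max_workers=max_workers)
        # Submit in order, so early frames tend to finish first.
        self._futures = {key: self._executor.submit(load_fn, key) for key in keys}

    def get(self, key):
        """Return the loaded value for key, waiting only for that one load."""
        return self._futures[key].result()

    def ready(self, key):
        """True if the load for key has already finished."""
        return self._futures[key].done()

    def shutdown(self):
        """Let queued loads finish, then release the worker threads."""
        self._executor.shutdown(wait=True)


def slow_load(name):
    """Hypothetical stand-in for the real read_data(); sleeps to mimic I/O."""
    time.sleep(0.1)
    return f"data for {name}"


files = [f"file_{i:03}.dat" for i in range(20)]
loader = BackgroundLoader(files, slow_load)
first = loader.get(files[0])  # returns after ~0.1 s, not after all 20 loads
loader.shutdown()
```

One possible way to wire this into the viewer, not tested here, is to call `loader.get(file)` inside a `hv.DynamicMap` callback whose `file` dimension is given the file list via `.redim.values(file=files)`, so only the frame currently shown has to be ready. Threads suit the simulated I/O-bound load; a CPU-heavy loader may still be better served by the process pool used in the script.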

Any suggestions for improving this design? Are there any client-side caching options? Would serving static images or using compression help? I have no experience with HTML or JavaScript.
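On the compression question, one server-side option that needs no JavaScript is to shrink each frame before it is plotted, for example by quantizing the floats to 8-bit codes and optionally compressing the bytes. This is a minimal sketch with NumPy and `zlib`; `quantize_uint8` and `dequantize` are hypothetical helpers, and whether 256 levels are enough for this data (the plots use a gray colormap) is an assumption to check against real images.

```python
import zlib

import numpy as np


def quantize_uint8(arr):
    """Linearly map a float array onto 0..255 and return (codes, lo, hi)."""
    lo, hi = float(arr.min()), float(arr.max())
    scale = (hi - lo) or 1.0  # avoid divide-by-zero on constant frames
    codes = np.round((arr - lo) / scale * 255).astype(np.uint8)
    return codes, lo, hi


def dequantize(codes, lo, hi):
    """Approximate inverse of quantize_uint8 (error at most half a step)."""
    return codes.astype(np.float64) / 255 * ((hi - lo) or 1.0) + lo


frame = np.random.rand(2500, 300)      # same shape as the simulated data
codes, lo, hi = quantize_uint8(frame)  # 8x fewer bytes than float64
packed = zlib.compress(codes.tobytes(), 6)
print(frame.nbytes, codes.nbytes, len(packed))
```

Uniform random test data is close to incompressible, so `len(packed)` will stay near `codes.nbytes`; real images with smooth regions may compress much better. If the uint8 codes are passed to `hv.Image` instead of the float array, hover values will be in code units unless they are mapped back with `lo` and `hi`.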

Working synthetic example below. (I’m timing the data load, but I don’t know how to time how long HoloMap takes to render each image.)

```python
import time
from multiprocessing import Pool

import holoviews as hv
import numpy as np
import panel as pn
from holoviews import opts
from holoviews.operation.datashader import regrid

hv.extension('bokeh')
pn.extension()

# set global plot defaults
opts.defaults(
    opts.Image(
        height=400,
        width=800,
        cmap='gray',
        invert_yaxis=True,
        framewise=True,
        tools=['hover'],
        active_tools=['box_zoom'],
    ))


def read_data(m=2500, n=300):
    ''' simulated data i/o function '''
    data = np.random.rand(m, n)
    time.sleep(0.1)  # add some realistic delay
    return m, n, data  # return the array generated above, not a second one


def create_image(path):
    ''' create image from data '''
    x0, x1, nparray = read_data()
    return path, hv.Image(nparray,
                          kdims=['x0', 'x1'],
                          bounds=[1, 1, x0, x1],
                          extents=(0, 1, x0 + 1, x1))


# synthetic data file names
files = [f'file_{i:03}.dat' for i in range(101)]

# preload & cache dictionary of filename:image pairs
start_counter = time.perf_counter()
if 'image_dict' in pn.state.cache:
    image_dict = pn.state.cache['image_dict']
else:
    # reseed each worker so the processes don't all generate identical arrays
    with Pool(initializer=np.random.seed) as pool:
        result = pool.map(create_image, files)
    pn.state.cache['image_dict'] = image_dict = dict(result)
end_counter = time.perf_counter()
print(f"create_image: {len(image_dict)} images, "
      f"{end_counter - start_counter:.4f} seconds")

# add image dictionary to HoloMap with interpolation
plot = regrid(hv.HoloMap(image_dict, kdims='file'),
              upsample=True,
              interpolation='bilinear')

# simple layout
template = pn.template.BootstrapTemplate(title='Image Viewer')
template.main.append(plot)
template.servable()
```
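The script times the data load with `time.perf_counter()`; that pattern can be wrapped in a small reusable context manager and applied to other server-side steps. This is a minimal sketch with a hypothetical `timed` helper. It only measures Python work in the server process: wrapping a call such as `hv.renderer('bokeh').get_plot(plot)` would, assuming that API behaves as expected here, time building the Bokeh model for the initial frame, but not the network transfer or the browser drawing the image. Those are better observed in the browser's developer tools (for example the network tab).

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results=None):
    """Measure wall-clock time of the enclosed block with time.perf_counter().

    Prints the elapsed seconds and, if a dict is passed, stores them under label.
    """
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        print(f"{label}: {elapsed:.4f} s")
        if results is not None:
            results[label] = elapsed


timings = {}
with timed("read_data", timings):
    time.sleep(0.1)  # stand-in for the real read_data() call
```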

Thanks.